How Cognitive Biases Affect Support For Life Extension

A cognitive bias is a systematic deviation from rational thought that can influence people’s reasoning without them even noticing. Several cognitive biases have been observed in studies over the past few decades, demonstrably influencing decision-making, self-perception, and preferences while affecting many human activities.

Naturally, cognitive biases have a measurable effect on the field of life extension advocacy, which is why it’s probably worth your time to have a primer on them, whether you’re an advocate seeking to improve your advocacy skills or simply undecided about life extension and trying to expand your understanding before you make up your mind.

The list below contains biases that most frequently influence people in relation to life extension. This isn’t an exhaustive list of cognitive biases in general, and unmentioned biases may affect people’s thoughts on life extension.


Availability heuristic


Aliases: —

External sources: Wikipedia, The Learning Scientists

Related logical fallacies: Misleading vividness

Description

The availability heuristic is a cognitive bias by which the likelihood of a given event is evaluated in terms of how easily someone can recall examples of that event happening before. Events that happened more recently are more readily available in our minds, so we tend to judge them as more likely to happen again, disregarding other factors that may play a crucial role in assessing this likelihood.

General examples

Suppose that your neighbor’s apartment was robbed last week; understandably, this would make you feel less safe for a while, and you might start thinking about getting safety locks and extra alarms. The event is fresh in your mind, and this may alter your perception of its likelihood, making you conclude that perhaps your neighborhood is not as safe as you thought it was. However, this robbery might well have been the first one in 10 years, which would suggest that your neighborhood is actually quite safe.

How often something is reported on can also influence our ability to judge its likelihood. For example, a terrorist attack is likely to be vividly discussed in the media for a very long time, which might skew your estimate as to how likely such an attack is to happen in the first place. It’s also important to keep in mind that more sensational events are more present in people’s minds; if a crazy shooter were to kill a single person in an attack, this would make the news and probably be on your mind for a while; on the other hand, a person dying of a common disease would not have such an impact on you, even though you are far more likely to die of diabetes than of being assaulted with a gun, at least in the United States. As a side note, terrorist attacks are tragic and spectacular, but they rank fairly low in terms of victims compared to pretty much any other cause of death.

Occurrences in life extension

The world is a very big place; if we assumed for the sake of argument that only one bad thing happened today in each country of the world, we would still be talking about hundreds of bad things. This means that, when you listen to the news, you are bound to hear about at least a few bad things; on the other hand, you won’t hear many positive things unless they are unusual enough to be newsworthy. This means that there are plenty of recent examples of bad things in your mind that can skew your perception of how likely these things are to happen, which leads to an overly pessimistic view of the world. It is this false image of the world that leads some people to conclude that our society is headed for disaster and that extending lives for the sake of living in an ever-worsening world is simply not worth the trouble. This is generally known as the dystopian future objection.

Backfire effect


Aliases: —

External sources: Wikipedia, Effectiviology

Related logical fallacies: —

Description

The backfire effect arises when presenting evidence that contradicts a pre-existing conviction not only fails to convince whoever holds the conviction but makes them even more entrenched in the belief that their conviction is correct, rejecting the evidence. While this is expected to be more frequent when the pre-existing conviction is ideological or emotional in nature, it has been observed that the backfire effect is in fact quite rare—nonetheless, it’s good for life extension advocates to know that it exists and that they might bump into it in their advocacy efforts.

General examples

The word “nuclear” can be so scary that people fear anything nuclear; for example, they might fear nuclear fusion—the holy grail of energy generation—even though it’s physically impossible for a fusion reaction to propagate outside of the reactor as a fission reaction might. The idea that “nuclear is bad” is so entrenched in some people that even explaining why fusion is so much safer than fission might make them reject it even more strongly rather than convince them.

The same is true of GMOs—in some cases, people who are convinced they’re bad for you by default will not be persuaded by contrary evidence, which they might well dismiss as flawed or biased, just because it denies what they believe in.

Occurrences in life extension

The examples above can easily end up being part of a discussion about life extension, though a more specific one is that of overpopulation: the truly dominating factor of population growth is the birth rate, not the death rate, so simply eliminating age-related deaths is unlikely to cause significant population increase. However, the intuitive conclusion that fewer deaths equals overpopulation is hard to dislodge, and even if you do the math, you might end up making people even more entrenched in their belief that rejuvenation will cause overpopulation.
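To see why the birth rate dominates, here is a deliberately crude sketch rather than a demographic forecast. It assumes that nobody ever dies and that each newborn generation is a fixed multiple of the previous one; the function name `cumulative_population` and every number used are hypothetical and chosen only for illustration.

```python
def cumulative_population(growth_per_generation: float, generations: int) -> list[float]:
    """Toy model with zero deaths (an assumption, not real demographic data).

    growth_per_generation is the size of each newborn generation relative to
    the previous one (hypothetically, roughly children per woman divided by 2).
    The first generation has size 1.0, so totals are in units of that cohort.
    """
    totals = []
    cohort = 1.0
    total = 0.0
    for _ in range(generations):
        total += cohort  # everyone ever born is still alive
        totals.append(total)
        cohort *= growth_per_generation
    return totals


# Three hypothetical scenarios over 40 generations.
below_replacement = cumulative_population(0.75, 40)
at_replacement = cumulative_population(1.0, 40)
above_replacement = cumulative_population(1.5, 40)

for label, totals in [
    ("below replacement", below_replacement),
    ("at replacement", at_replacement),
    ("above replacement", above_replacement),
]:
    print(f"{label}: total after 40 generations = {totals[-1]:.2f}")
```

In this toy model, even with no deaths at all, a multiplier of 0.75 keeps the total below 4 starting cohorts forever (the geometric series sums to 1 / (1 - 0.75) = 4), a multiplier of 1.0 adds one cohort per generation, and only a multiplier above 1 produces runaway growth. The shape of the curve is set by the birth multiplier, not by the absence of deaths.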

Bandwagon effect


Aliases: —

External sources: Wikipedia, Effectiviology

Related logical fallacies: —

Description

The bandwagon effect is the tendency to “follow the crowd”: the more that an idea, belief, or opinion is already popular, the more people are likely to “hop on the bandwagon” and embrace it themselves, regardless of any valid reasons for or against doing so. There is more than one possible reason for this phenomenon, including fear of being left out of the majority or simply wishing for a shortcut that spares us the trouble of thinking for ourselves.

General examples

The bandwagon effect can be rather harmless; for example, people might start supporting a certain sports team just because it happens to be winning a lot, and as the team gains more and more supporters, others join in, following the crowd. This is not so strange, considering that humans are social animals, and especially during our evolutionary history, it was beneficial for individuals to do all they could to preserve their position within their group, and conforming to the opinion of the majority could be a way of doing exactly that.

A rather surprising manifestation of the bandwagon effect is given by the famous Asch conformity experiments. In these experiments, a number of test subjects were shown a reference line alongside several comparison lines and were asked to say which comparison line matched the reference in length. All but one of the test subjects were actors who were supposed to indicate the wrong line, and surprisingly, a significant number of real test subjects agreed with the majority, even though the line they were indicating was clearly not a match. The test subjects conformed to the prevalent opinion and hopped on the bandwagon, mostly for no other reason than a deep-seated instinct to not contradict the majority.

Occurrences in life extension

If you are talking about life extension to a group of people who are new to the subject and the majority is against the idea, anyone on the fence might follow the majority regardless of doubts, especially if the majority is mocking the idea of life extension as silly or selfish. No one wants to come across as somebody who believes in something silly or selfish, so social pressure might push people to follow the majority for the sake of avoiding this possibility.

Of course, it is also possible that the bandwagon effect might actually help the idea of life extension gain acceptance once a critical mass of supporters has been reached; at that point, more and more people might join in simply for fear of being left out, regardless of any personal conviction. It would be preferable if people realized why life extension is a good thing rather than just hopping on the bandwagon when the time comes, but we could be happy about this particular instance of this cognitive bias.

Belief bias


Aliases: —

External sources: Wikipedia, APA

Related logical fallacies: Argument from incredulity, Selective attention

Description

If you’ve ever accepted or rejected an argument only on the grounds of how reasonable or unreasonable its conclusion sounded, then you have most likely been a victim of belief bias. Our preconceptions about a given claim can easily mislead us when we try to assess the validity of any arguments presented for or against the claim itself; in other words, if I believe X is true, I might be skeptical of any argument against X precisely because in my perspective, it isn’t plausible for X to be false; conversely, if I am convinced that Y is false, I might reject an argument for Y because it doesn’t align with my worldview, in which Y is just not a thing.

People can have any number of reasons to be biased towards a certain belief, and those reasons don’t really matter here; what matters is that whether or not something sounds plausible isn’t enough to discard arguments in favor of or against it. You can make a bad argument for a true conclusion (X is true, but not because of what you’re saying), and you can make a logically impeccable argument for a false conclusion (X is false, and the reason why you thought it followed from your correct reasoning is that one or more of your premises was false).

It’s worth noting that sometimes you can take a shortcut and dismiss an argument because of the conclusion it leads to; in mathematics, this is called a proof by contradiction. If an argument leads to a conclusion that is provably false, then the argument must be wrong somewhere. The difference from the belief bias lies in the fact that, in this case, we don’t just hold a belief that the conclusion is false; we know it is. For example, if I stormed into your living room and said, “Twenty-eight is an odd number, and here’s why,” you really wouldn’t need to listen to my argument to know it’s wrong, because given the definition of an even number, it’s elementary to see that 28 is even, not odd. If my argument were correct, then it would contradict this simple fact; since 28 can’t be both odd and even, my argument must be wrong somewhere—the error may lie in the reasoning itself or in one of my premises, but, either way, we know there is one, even without knowing where or what it is. (This is an example of where an old joke about mathematicians comes from—sometimes, they’re content to know something exists, even though they don’t have the foggiest clue what it looks like.)

At this point, belief perseverance is worth mentioning. This bias is somewhat related to belief bias, and the difference between them is subtle: while having a belief bias means that you accept an argument for something just because it validates your pre-existing worldview or reject an argument because it doesn’t, belief perseverance is the tendency to stick to your original belief even after it has been shown to be groundless.

A related bias is the famous confirmation bias: the tendency to prefer information that confirms what we already believe is true, especially in the case of emotionally charged or deep-rooted beliefs. For example, if a person has spent his whole life believing that aging is a generally good thing and then comes across the idea that aging is a bad thing that may and should be defeated, this person is subsequently more likely to look for more information about why aging is a good thing rather than the opposite. This bias occurs because we like to be reassured about our own beliefs, regardless of whether they’re actually true or not.

General examples

Current evidence shows that climate change exists, but as with most global issues, there are fervent supporters and stalwart skeptics. If I came along and said, “Climate change is real because it was unusually warm last week!” a fervent supporter might nod approvingly, because this claim favors a cause he strongly believes in, but he’d be wrong. Climate is a much more general, planetary phenomenon encompassing much more than just the weather last week in my corner of the world; if climate change is real, we can reasonably expect it to throw local weather out of whack everywhere sooner or later, but the fact that the weather over here was out of whack last week does not prove climate change. This was an example of a true conclusion that does not follow from a true premise of out-of-whack weather, because the reasoning was out of whack too.

An example of logically correct reasoning leading to a false conclusion because of incorrect premises is the following syllogism:

If the Earth were spinning, a helicopter hovering motionless in midair would be able to travel from one place to another, because the Earth would move underneath it.

Experimentally, helicopters can do no such thing.

Therefore, the Earth is not spinning.

If the premise about the hovering helicopter were true, so would be the conclusion that the Earth isn’t spinning. Unfortunately for flat-earthers, the premise is false because of this little thing called inertia, which is the same reason why, if you throw a ball forward along the direction of flight inside an airliner traveling at cruising speed, you don’t end up being hit in the face by a ball at 800 km/h; sometimes, even the laws of physics can be kind. If one already holds the belief that the Earth is flat rather than a spinning geoid, she can easily buy into the false premise (though, in her defense, the hovering helicopter problem baffles many people); conversely, if the flat-Earth hypothesis is a pet peeve of yours, you might be tempted to say that the reasoning above is wrong. The reasoning is fine; what’s wrong is the premise.
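The difference between a valid form of reasoning and true premises can be checked mechanically. The sketch below is only an illustration, and the helper names `implies` and `is_valid` are made up for it; it brute-forces every combination of true and false for two statements, S (“the Earth is spinning”) and H (“a hovering helicopter drifts over the ground”), and asks whether the premises can all be true while the conclusion is false.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Material implication: 'if p then q' is false only when p is true and q is false."""
    return (not p) or q


def is_valid(premises, conclusion, n_vars: int) -> bool:
    """A form is valid if no truth assignment makes all premises true and the conclusion false."""
    for values in product([True, False], repeat=n_vars):
        if all(premise(*values) for premise in premises) and not conclusion(*values):
            return False
    return True


# The helicopter syllogism: if S then H; not H; therefore not S.
helicopter_form_is_valid = is_valid(
    premises=[lambda s, h: implies(s, h), lambda s, h: not h],
    conclusion=lambda s, h: not s,
    n_vars=2,
)

# For contrast: if S then H; H; therefore S (the shape of the warm-week argument).
warm_week_form_is_valid = is_valid(
    premises=[lambda s, h: implies(s, h), lambda s, h: h],
    conclusion=lambda s, h: s,
    n_vars=2,
)

print(helicopter_form_is_valid)  # True
print(warm_week_form_is_valid)   # False
```

The check says nothing about whether the premises are actually true. The helicopter argument passes because its form is sound logic, and it still reaches a false conclusion because its first premise is false; the warm-week argument fails the check even though its conclusion happens to be true.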

Occurrences in life extension

Attaining eternal youth has been a dream of humanity since the dawn of time, but we’ve never quite got there thus far. While now it appears we’re getting closer, the history of rejuvenation is studded with frauds, quacks, and most of all, failures on top of failures. This explains reasonably well why most people believe that rejuvenation is simply not possible, and it is why you can expect them to be extremely skeptical when you tell them about rejuvenation research and experimental treatments. They have an instinct that making people younger is not possible, and based on this, they may dismiss any evidence to the contrary.

Other instances of belief bias related to life extension concern tangential, hypothetical issues—such as overpopulation or dystopian futures. Many people believe, for whatever reason, that there are too many people on this planet already and that it can only get worse or that we’re headed for a disaster of some sort, whether it’s the rise of a dictatorship, rampaging poverty, or an ecological catastrophe. While nothing is certain, some people only lukewarmly consider evidence that the future may be better than how the mainstream perception depicts it—this evidence goes against their preconception that the world will end up in the gutter, and they won’t easily accept it.

Curse of knowledge


Aliases: —

External sources: Wikipedia, Harvard Business Review, Effectiviology

Related logical fallacies: —

Description

The curse of knowledge is a bias briefly worth mentioning for the sake of life extension advocates’ efforts. If you’ve ever tried to explain life extension science, or rebut an objection to rejuvenation, to somebody who apparently just didn’t get it, the fault might have been yours. You might be a victim of the curse of knowledge—the unintentional assumption that someone else possesses the necessary background to understand what you’re saying. The more educated you are on a given subject, the more likely you’ll be to use obscure technical jargon and omit important information that you don’t realize that other people might not know. When you’re very familiar with a topic or a concept, it might feel so easy and intuitive to you that you expect it to be for others as well; this happens often with relatively simple mathematical ideas that confuse the heck out of people who’ve never heard of them before.

You don’t need to be a Professor Emeritus to suffer from the curse of knowledge; you just need to be more familiar with a subject than other people. Try to keep this in mind if, during your personal advocacy efforts, the people you talk to often fail to understand you.

Diffusion of responsibility


Aliases: —

External sources: Wikipedia, Psychology Today

Related logical fallacies: —

Description

More than a cognitive bias, diffusion of responsibility is a sociopsychological phenomenon that lies at the core of some cognitive biases (such as the bystander effect). Simply put, individuals in a group tend to feel less responsible to take action for the pursuit of a goal, be it resolving an emergency or achieving a longer-term objective. If they know that there are more people involved than just themselves, individuals tend to assume that others already have taken action, will take action, and will know better what needs to be done, and, in general, they feel less responsible for the consequences of inaction—after all, if you’re the only one witnessing a request for help, you’re obviously the one accountable for a crime of omission if you do nothing and things go awry; if you’re in a group when a situation arises, why should you specifically be held accountable? There are others too. That’s more or less what goes through our heads.

General examples

In Nordic countries, it is anything but unusual to see drunk people sleeping on the streets, especially on weekends. This is why a lot of people, when they see somebody lying still in an unusual place, assume he is “just drunk” and walk away, concluding that there’s nothing to be done. Aside from the fact that being so intoxicated that you’ve passed out isn’t free of danger—for all you know, a drunk lying still on the floor could be already dead—it’s not always safe to assume that someone is “just drunk”; the reasons that a person is knocked out may range from a hit on the head to hyperglycemia. Yet, it’s very easy to just pass by, assuming that, if something wrong is going on, somebody else already did or will do something.

Diffusion of responsibility gets worse as the size of the group involved increases, so you can imagine what happens when the group is the whole world; global causes often become “not my problem” for a lot of people, though in their defense, not everybody is in a position to tackle large-scale problems—even though, in most cases, simple methods of helping, such as donations, are available.

Occurrences in life extension

By now, it should be clear that we’re discussing diffusion of responsibility because of the effects it can have on the life extension community. People who don’t support life extension are not at issue here, because they don’t feel that there’s any action to be taken to begin with; however, among supporters of a cause, it is not uncommon to have a fair number of “inactivists”: people who are onboard but do nothing or next to nothing to achieve the goal because they feel that other people are doing or will do what is necessary. In the case of life extension, there are people who are thankfully pushing for it, but we’re nowhere near a sufficient number of people getting actively involved, and as they say, the more, the merrier. (Wink-wink.)

End-of-history illusion


Aliases: —

External sources: Original paper, Wikipedia

Related logical fallacies: —

Description

The end-of-history illusion is a phenomenon described in a 2013 paper by Quoidbach, Gilbert, and Wilson; in their study, they observed how people of all ages predicted that they wouldn’t change significantly in the next decade, despite reporting that they did change significantly in the previous decade. However, the changes in their personas that the nearly 20,000 study subjects reported ten years after their own predictions were more significant than they had anticipated, which induced the authors of the study to hypothesize that people might tend to see their present selves as their “final form”, set in stone pretty much forever.

According to the results of the study, the effect seems to be more pronounced at younger ages—for example, people in their late 20s had changed a lot more than they had predicted when they were in their late teens, whereas people in old age had changed much less—although discrepancies between the predicted and observed magnitude of the changes were observed at all ages.

The reason why this bias is worth mentioning in this context is that it may explain why many people are concerned that much longer lifespans might lead to “eternal” boredom. If you think that your future self will basically be just an older version of your present self, with no new interests, passions, values, or ideas, it’s understandable if you’re concerned that, in a few decades’ time, you will be irremediably bored. The end-of-history illusion shows that this concern could be unwarranted, as the changes that you’ll undergo are probably more significant than you think.

As said, the illusion was less pronounced in older people, which might seem to suggest that indeed, as you get older, you change less and less and might thus end up being bored to death past, say, age 110. However, the elderly people in this study did not undergo rejuvenative therapies, and we have no idea what the results might be for rejuvenated elderly people with the same brain plasticity as young adults.

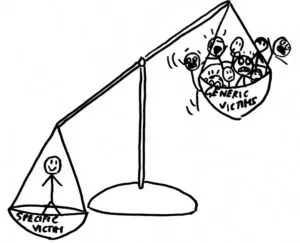

Identifiable victim effect


Aliases:

External sources: Journal of Risk and Uncertainty, Wikipedia

Related logical fallacies: —

Description

This is the tendency to care more for specific victims, who have faces, names, and relatable stories, than for large, vaguely defined groups, such as “children”, “the poor”, and “the elderly”. This may seem strange to you, given that children and the poor are guests of honor at the Worse Problems party that is often thrown whenever life extension is mentioned, but saying that worrying about the poor (or children) is more important than worrying about life extension is one thing; actually helping the poor (or children) is another, and we are more likely to help a specific poor person or child in need than to donate to charity because specific people are easier to empathize with.

General examples

Jessica McClure fell into a narrow well in 1987, when she was a little over a year old. The child ended up spending 58 hours trapped underground, and thankfully, she was eventually rescued and brought to safety, and she’s still alive and well. Even though she was just a single baby (whose life, make no mistake, was in no way less valuable than that of others), a monumental effort was put together to rescue her; she received the sympathy of the entire United States and donations totaling over $700,000, even months after being rescued, and a movie about her ordeal was produced. There’s absolutely nothing wrong with such a display of generosity and care, but as noted by the authors of the paper linked above, the same amount of resources devoted to a single person could be used to save hundreds; the authors give the example of preventative healthcare for children. If people are willing to do this much for a single life, you’d think they’d do much more for many lives, but as a matter of fact, raising money for the more abstract group of sick children is much harder than for a specific, unlucky girl who happened to go through such a terrible predicament.

Occurrences in life extension

“The elderly” are one such unfortunate abstract group that people tend not to relate to very much. If you hear on TV about a lone, elderly person who was robbed, abused, or abandoned, you are more likely to empathize and to feel compelled to do something to help if asked, but “the elderly” are an entirely different story; they are unidentifiable victims whom not many people feel compelled to help, whether in the traditional way or by supporting the development of rejuvenation therapies. (It’s also worth mentioning that inaction is often regarded as less morally despicable than harmful action, which is known as the omission bias. This may be why some people don’t seem to see any moral issue with not developing rejuvenation, even if it’s feasible: allowing aging to cause the suffering and death of millions may appear less unethical than actively inflicting suffering and death.)

A bias similar to the identifiable victim effect may also be at play when people nonchalantly say that “older generations need to make room for the new”, for example; the statement is cleverly disguised as a profound greater-good truth, but it basically says that old people must die. Many people have no problems agreeing with it when it’s the vaguely defined “older generations” that are supposed to check out, but they probably don’t feel that way about their beloved, dying grandmothers.

Illusory truth effect


Aliases: reiteration effect

External sources: Wikipedia

Related logical fallacies: Alleged certainty

Description

This cognitive bias can be summarized by the well-known saying “Repeat a lie a million times and it’ll become true.” Because of the illusory truth effect, we are more likely to regard information as true after being exposed to it several times (a related effect, the mere-exposure effect, skews our preferences towards things that we’ve seen more often), for essentially no other reason than the cognitive ease produced by the familiarity of the information.

General examples

“Everybody knows” that scientists can’t wrap their heads around how bumblebees can fly; unfortunately, that’s actually false. Still, it wouldn’t be surprising if you’d seen it on your Facebook feed so many times that you thought it was true.

Other common misconceptions that are often believed, by sheer force of repetition, are that evolution is a progression from inferior to superior species and that we evolved from chimps. Evolution is a process that favors features that are best suited to an individual’s environment, which has nothing to do with the individual being intrinsically superior; and we did not evolve from chimps—we share a common ancestor with them.

Occurrences in life extension

There are a number of false beliefs about aging and life extension that are repeated all the time, such as that aging also has good sides, that life extension is about prolonging decrepitude, and that aging and age-related diseases are two different things, not to mention more general but still related beliefs, such as that natural is necessarily good or that we are not only already critically overpopulated but that saving lives will necessarily worsen the situation.

Negativity bias


Aliases: Negativity effect

External sources: Wikipedia

Related logical fallacies: Worst-case scenario fallacy

Description

The negativity bias is the reason why bad news sticks with you longer than positive news; we all have a tendency to pay more attention and give more importance to negative things than to neutral or positive ones, even if their intensity is equal.

A possible explanation for this phenomenon might be evolutionary in nature. During the early days of human evolution, a negativity bias might have made the difference between life and death; for example, suppose you decided not to trust a member of the tribe and shunned him for a single act of disloyalty. Maybe an isolated incident could make you shun somebody who might have been helpful in the future, but this alone doesn’t endanger your own survival. On the other hand, if you did trust the disloyal member and then it turned out that he secretly sided with a rival tribe, this could spell the end of your own tribe—he could lure you all into an ambush.

Similarly, animals who take fewer risks may happen to pass up on a good chance to catch a nutritious meal, but this is not the immediate danger that taking more risks is. A flashy berry could be very good or very poisonous—if it’s the former and you pass up on it, you can still find other food; if it’s the latter and you eat it, that’s the end of the natural selection game for you.

General examples

If you hear on the news that a man was killed in your city, the news will likely remain vivid in your memory for a while, influencing your perception of how (un)safe your city is or, if you have a flair for the dramatic, how much in decline human society is. However, if you heard that the police managed to save a man from assault, you wouldn’t be likely to feel safer because your city has a good police force; you would probably be focusing on the fact that an assault took place. People usually notice that the glass is half empty rather than half full.

Another abstract example is that of the labeling of honest/dishonest people; a single criminal or dishonest act will be enough to label someone as “dishonest”, even for life, compromising this person’s chances to be trusted in the future. However, a single honest act by a known criminal isn’t going to be enough to change the perception that people have of him from “dishonest” to “honest”.

Occurrences in life extension

Given that risk avoidance can represent a survival advantage, it’s no surprise that the trait was mostly preserved in humans in the form of the negativity bias. Skepticism about big changes and a preference for the familiar, well-tested status quo can save your life and your genes from oblivion, so an indiscriminate diffidence towards change is a safer bet than cost-benefit analysis skills; at least, it was a safer bet in our ancient history. Life extension is a big change: indefinite lifespans, or even just much longer lives, would require a rethinking of many aspects of our society, and the idea of such big changes pushes the buttons of our innate negativity bias. The final product of this is the widespread belief that, ultimately, life extension might be more trouble than it’s worth, if not just an egotistical desire that will spell the end of humanity. Yes, some people are that dramatic about it, envisioning ecological catastrophes, gunfights between life extensionists and opponents, dystopian futures that make a long life pointless to live, and so on. Concerns that involve a dystopian future could also be due to a bias known as declinism: the feeling that a country, an institution, or, more generally, the world, is in decline and that things used to be better in the past. The existence of declinism doesn’t mean that the world can’t be in decline or that each time you find that a country is in decline, it’s because you’re affected by declinism, but the data shows that, in general, the world is doing better than in the past, not worse.

Optimism bias


Aliases: Unrealistic optimism, comparative optimism

External sources: Wikipedia, ScienceDirect

Related logical fallacies: —

Description

The optimism bias causes some people to underestimate their likelihood of experiencing a negative event. The reasons may be many, such as wishful thinking or the illusion of having more control over a given situation than other people, but the result is always the same: without valid supporting evidence, people believe that they have a better chance than others of avoiding the negative consequences of certain actions or that specific risks are lower in their case.

General examples

If you have a friend who justifies his recklessness as a driver because of his supposedly superior driving skills, you’re probably dealing with a case of optimism bias. Similarly, obese people who persist in eating unhealthy diets, dismissing the possibility of developing diabetes because “they feel fine”, most likely represent a very dangerous case of optimism bias.

Another example, somewhat tragicomic in nature, is that of a friend of a friend of mine, a smoker who is apparently convinced that “his body can handle smoke.” This is total nonsense, as there is no way he can sense whether or not smoke is inducing oncogenic mutations in his genome. If it weren’t depressing, such a statement would be amusing.

Occurrences in life extension

Optimism bias can manifest in people who think that their own aging won’t be so bad. Probably nobody is so silly as to think that he won’t age, but wishful thinking can induce people to think that they will be “healthy” old people with no major pathologies, perhaps even in spite of a history of poor lifestyle choices. We all wish to be healthy and not to suffer from major problems, and it makes us feel good to think that we will be healthy, but it’s a serious mistake to think that aging should make an exception for you specifically. You might or might not be luckier than the average older adult, but that is not set in stone, and without rejuvenation biotechnologies, you are guaranteed to suffer from at least one age-related condition that will kill you, even if it is only the exhaustion of the pacemaker cells that tell your heart to beat, unless something else kills you first.

Optimism bias might also be the reason why people so readily equate rejuvenation with immortality. While everybody is certain to die of aging if nothing else, in general, nobody is certain to die of anything other than aging; since people tend to underestimate their likelihood of experiencing negative events, such as accidental or ill-health-related death at younger ages, they might conclude that, if aging is taken out of the equation, their chances of ever dying of something else are negligible. This might or might not be the case, but again, it cannot be assumed lightly; in any case, rejuvenation does not change your odds of dying of non-age-related diseases one bit, so it certainly doesn’t make you immortal.

Projection bias

Aliases: —

External sources: Wikipedia

Related logical fallacies: —

Description

Projection bias describes the tendency to let your current emotional states influence your estimates of future preferences; in other words, your present emotional state can and does interfere with how you think of something that might happen in the future.

General examples

A good example is that of a broken heart during adolescence. If you’ve had your heart broken by your high school crush, it wouldn’t be surprising in the least if, at that point, you thought that you’d never be able to fall in love again or that you’d never want to anymore, but that’s probably not what happened or even what your mood was like throughout the rest of your life.

Similarly, if you have an argument with somebody, sometimes you feel like you’ll wear a frown forever, and you might even end up carrying on the façade after you’re not angry anymore, just for the sake of not coming across as inconsistent. Immediately after a quarrel with someone, you might decide to cancel your participation in an event next week so that you won’t have to see that person, only to end up regretting the decision once your bad mood has subsided.

Occurrences in life extension

“Live longer and endure more misery? No, thank you!” You’re bound to bump into this kind of observation several times when discussing life extension (especially on social media), and this is pretty much a textbook example of the projection bias and a quite dangerous one at that. People who say this are letting their clearly negative present-day emotional state influence their estimate of whether they would like to be alive up to several decades in the future, when their general emotional state and perception of life are likely to have changed. More importantly, that future is a point in time about which they know nothing. Whatever the reasons that prevent them from appreciating life now might be, it is exceedingly unlikely that they will still hold true in the far future. To be fair, things could be worse and they could end up loving life even less, but this is not known, and they cannot reliably judge beforehand whether they would still like to be alive fifty years from now, especially if they’re in a bad mood.

Status quo bias

Aliases: —

External sources: Wikipedia, ThoughtCo.

Related logical fallacies: Appeal to normality

Description

As the name implies, the status quo bias is an irrational preference for the current state of affairs, in the absence of a valid reason and sometimes even when the status quo is measurably harmful to oneself or others.

As with many other human psychological phenomena, there are different possible explanations and no universal consensus; however, the status quo bias can be better understood in the context of the negativity bias, the tendency to perceive negative things more vividly than neutral or positive ones. Changing the status quo inevitably introduces the risk of losses and regrets, which we seek to avoid even at the cost of passing up opportunities for improvement. It is also possible that, through mere exposure, people perceive the omnipresent status quo as fair and the way things ought to be, and they rationalize any negative sides of the status quo in order to minimize cognitive dissonance. It must be pointed out that the status quo isn’t bad by default, and there certainly can be instances when refusal to abandon it can be rationally justified and isn’t a product of this bias.

If you would like to dig deeper, you might be interested in the more general context of system justification theory.

General examples

A somewhat trivial example is sticking to your favorite ice cream flavor, never trying any new one when you have the chance; your favorite flavor (the status quo) comes with a certain benefit, while any new flavor (that is, an alternative to the status quo) comes with a risk of not only missing out on a chance to enjoy your favorite flavor but also a risk that you will not like it at all. In this rather simple situation, the status quo bias might push you to avoid risks and losses by simply picking the same old ice cream you’ve always had.

Another example is a company that never updates its business practices, such as by always targeting a particular audience and never investing resources in finding new markets for fear of taking a loss.

Occurrences in life extension

Even though death by old age is far more common today than it was in the past, it has existed since the dawn of our species, and virtually everybody who ever lived knew that aging would end her days if nothing else did it first. Arguably, this makes aging the most glaringly obvious status quo in history, one to which everyone has constantly been exposed; our entire society, and the way all human societies have ever worked, revolves around the rise and fall of our health. It’s not surprising at all that most people are biased towards the status quo of aging and believe that our current life trajectory (infancy, school, work, family, retirement, death) is the way things ought to be; we’ve never seen another way of doing things, we have been largely incapable of modifying this trajectory (or at the very least its endpoint), and we’ve had plenty of reasons to rationalize its undeniable negative effects (i.e., the decline of our health).

At this point, it’s important to notice a nuance in the way the status quo can be preserved in general and in the case of aging. Depending on the circumstances, maintaining the status quo can be energetically inexpensive—in order to preserve the current state of affairs, sometimes it is enough to do nothing that would change it. This is called psychological inertia—the act of not changing a situation and simply letting it unfold unperturbed. In other circumstances, maintaining the status quo requires action to oppose external forces that seek to perturb it.

What people currently need to do in order to preserve the status quo of aging is absolutely nothing. Aging will happen of its own accord, it’s definitely not going to cure itself, and rejuvenation biotechnologies aren’t going to develop themselves either. Psychological inertia is more than enough in this case, and people who want to snuggle into the delusion that getting sick with age is the way that things ought to go only need to bury their heads in the sand to further their cause. This will change once current research and advocacy efforts have moved the needle far enough that the idea of rejuvenation has gained traction and more and more therapies are in the clinic or close to clinical translation. At that point, the last stronghold supporting the status quo of aging will have to actively oppose the change to prevent it from taking place, which we most definitely hope won’t happen; with some luck, psychological inertia will prevail once more, and they’ll just sit about and let rejuvenation happen, even if they don’t approve of it.